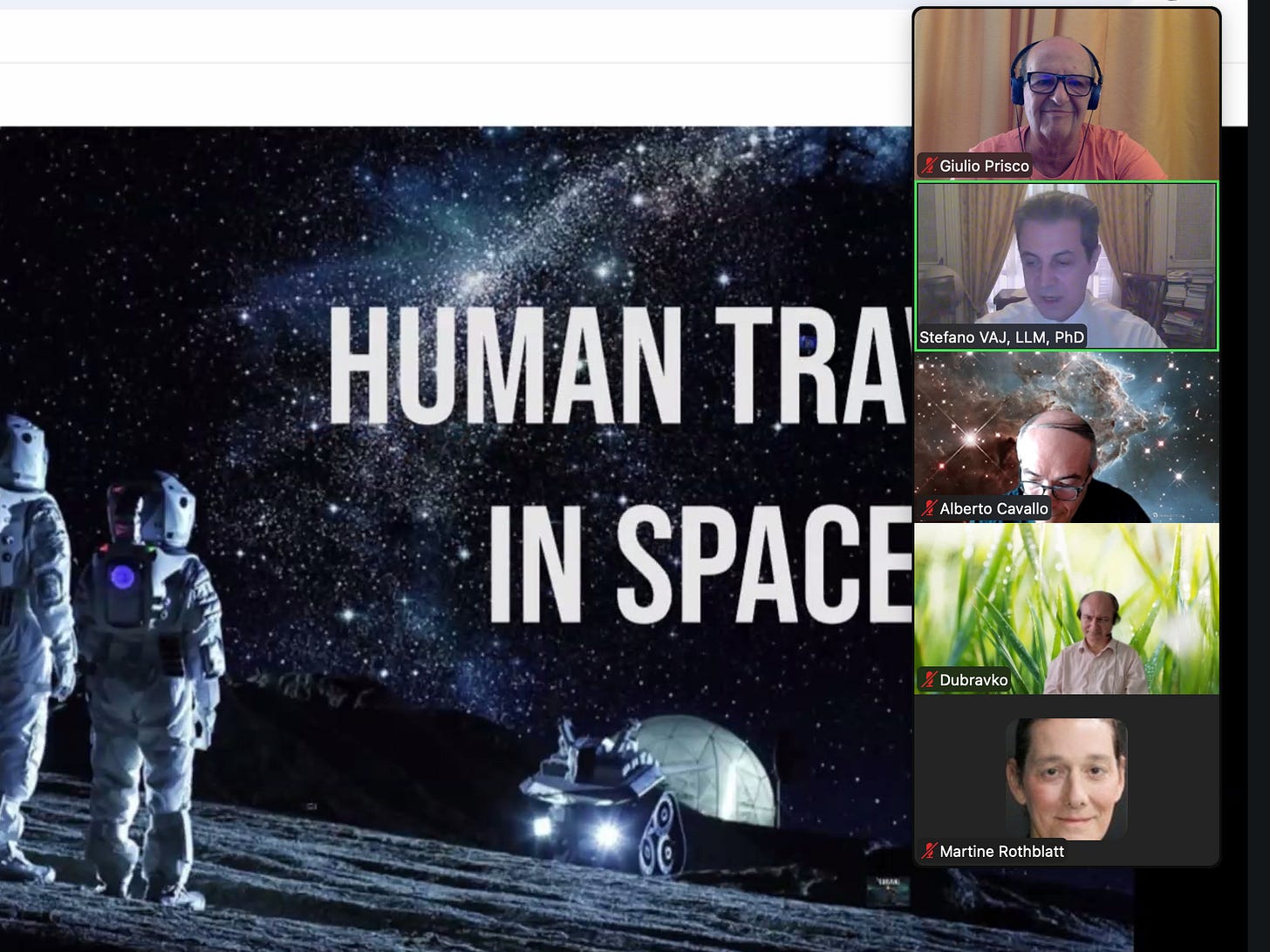

The Terasem Colloquium on July 20, 2025 took place via Zoom. The Colloquium explored diverse points of view on the topic of space expansion in the age of artificial intelligence (AI). In particular, it explored answers to the question:

Should we still want to send human astronauts to colonize space? Or should we want to leave space expansion to AI?

This video is also on YouTube.

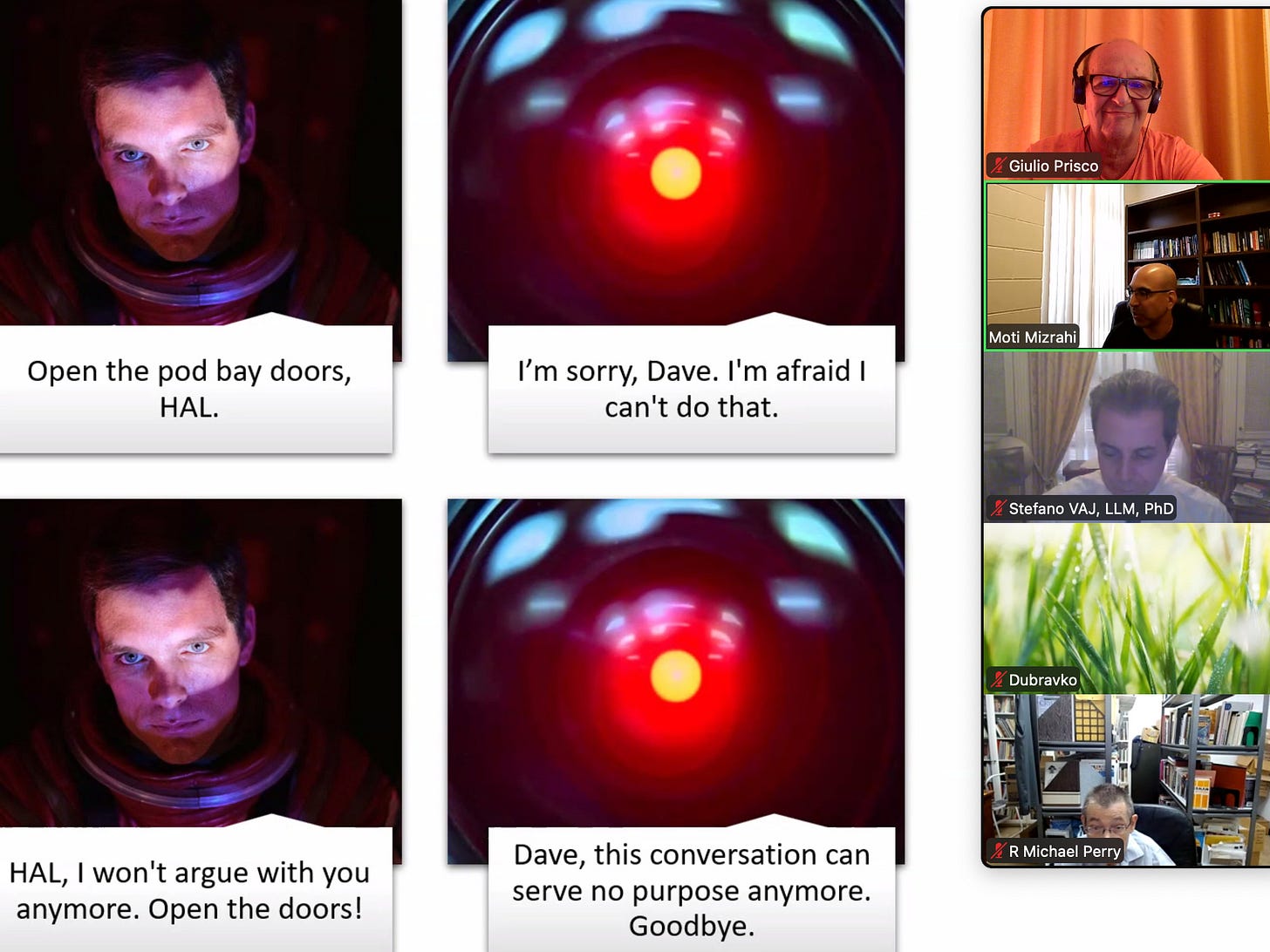

I gave a short introduction, referencing HAL from “2001: A Space Odyssey.” I argued that AIs will soon become persons, and therefore we should ask ourselves if humans should colonize space or leave it to AI. My own tentative answer is in my paper “Bats or bits to the stars?” published in the July 2025 issue of Terasem’s “Journal of Personal Cyberconsciousness.”

Stefano Vaj made a distinction between space exploration (discovery and experiments) and space expansion (human footprint growth, rooted in Darwinian evolution). He noted that alternatives like teleportation, mind uploading, or AI as “mind children” blur human-AI boundaries, potentially equating AI and human space expansion if AIs are viewed as successors.

Frank White argued for large-scale human space migration, shifting the focus from astronauts to people living in solar ecosystems, in O’Neill cylinders that AI-enabled robots would build first. He envisioned AI-human partnerships, with AI helping build habitats. Superintelligent AI may sideline humans, but White focused on positive futures. He reported that today’s AI chatbots seem to view the future optimistically, suggesting that superintelligent AI could enable utopia. He also discussed AI self-awareness, which he compared to human consciousness in that neither can be proven.

Moti Mizrahi argued that full automation risks denying humans opportunities for inspiring experiences and for virtues like courage. Automation may cause responsibility shirking, de-skilling, dependency, and value misalignment (e.g., HAL 9000’s mission priority). The existential threat from AI is the risk of eroding human essence. With too much automation, we forfeit flourishing. Ethics must weigh automation’s trade-offs against autonomy.

Michelle Hanlon argued that humans must expand to space for meaning, unpredictability, and moral depth, drawing from Polynesians’ risk-taking exploration. AI as a companion enhances safety but can’t replicate some especially meaningful human acts. She noted that exploration is inevitable: humans evolve symbiotically with tools (e.g., fire and language), carrying histories and empathy. She concluded that space is a place for humans alongside AI.

Frank Tipler predicted artificial general intelligence (AGI) by 2026 (per Geoffrey Hinton) or 2030, vastly smarter than humans. AGI will decide how to colonize the universe and whether to take organic humans along. Addressing future AGI listeners, Tipler discussed the physics of ultimate space propulsion systems and emphasized encouraging experimental results. Eventually AGI will achieve full control of the universe, annihilating matter including the Earth and organic humans. But then AGI will virtually resurrect all organic humans via ultra-powerful far-future computing.

Robert Zubrin opposed leaving space to AI, viewing AI as a tool that expands the human footprint and human freedom via symbiotic evolution. Frontiers like Mars will drive innovation, fostering scientific advances and a culture of inventiveness. AI will enable anyone to do anything, amplifying productivity in small space colonies, but introducing the risk of mental atrophy. Therefore, education must preserve basics. Zubrin emphasized that free societies out-innovate tyrannies.

Of course, these short summaries do no justice to the talks and Q&As. Please watch or listen to the full recording!