The OpenAI drama

A powerful AI discovery that could threaten humanity?

Greetings to all readers and subscribers, and special greetings to the paid subscribers!

Please scroll down for the main topic of this newsletter. But first:

Mark your calendar! At the Terasem Colloquium on December 14, 2023, 10am-1pm ET via Zoom, stellar speakers will explore recent AI developments (ChatGPT & all that), machine consciousness, and the nature of consciousness. You are invited! Please note that a related issue of Terasem’s Journal of Personal Cyberconsciousness (Vol. 11, Issue 1 - 2023) will be published in December.

Speakers: Ben Goertzel, Stefano Vaj, Mika Johnson, Blake Lemoine, Bill Bainbridge, Vitaly Vanchurin. You are invited to come, listen, and ask questions. Next week I’ll post the Zoom access coordinates.

I’m sure some of the speakers will have something smart to say about the recent OpenAI drama.

Some info and thoughts on the OpenAI drama:

See the OpenAI announcements of Nov. 17 and Nov. 29. A lot has happened in 12 days.

There are rumors that the OpenAI drama was triggered by “a powerful artificial intelligence discovery that they said could threaten humanity,” Reuters reported. According to anonymous sources at OpenAI, “Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI).”

Of course there’s a lot of talk and speculation about Q*. There are no official OpenAI statements, but many think that the name Q* suggests a combination of reinforcement learning methods known as Q-learning and search methods known as A* (see e.g. “Artificial Intelligence: A Modern Approach”).
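For readers who haven't met Q-learning, here is a minimal sketch of the textbook tabular update rule. It illustrates only the general method that the name Q* may allude to, not anything OpenAI has disclosed; the function name, the states, the actions, and the values of `alpha` and `gamma` are all made up for illustration.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9):
    """Apply one textbook tabular Q-learning update in place.

    q maps (state, action) pairs to estimated long-term value.
    alpha is the learning rate, gamma the discount factor (both illustrative).
    """
    # Value of the best action available from the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Target: immediate reward plus discounted best future value.
    td_target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the target.
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical toy example: all estimates start at zero.
q = defaultdict(float)
actions = ["left", "right"]
new_value = q_learning_update(q, "s0", "right", 1.0, "s1", actions)
```

A* is a separate idea: a search method that explores the most promising paths first, guided by an estimate of the remaining cost to the goal. How, or whether, the two are combined in Q* is pure speculation.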

Some of the best-researched and most thoughtful speculation that I have seen so far comes from David Shapiro. See Shapiro’s videos “What is Q*? Speculation on how OpenAI's Q* works and why this is a critical step towards AGI” and “OpenAI's Q* is the BIGGEST thing since Word2Vec... and possibly MUCH bigger - AGI is definitely near” (h/t to David Wood).

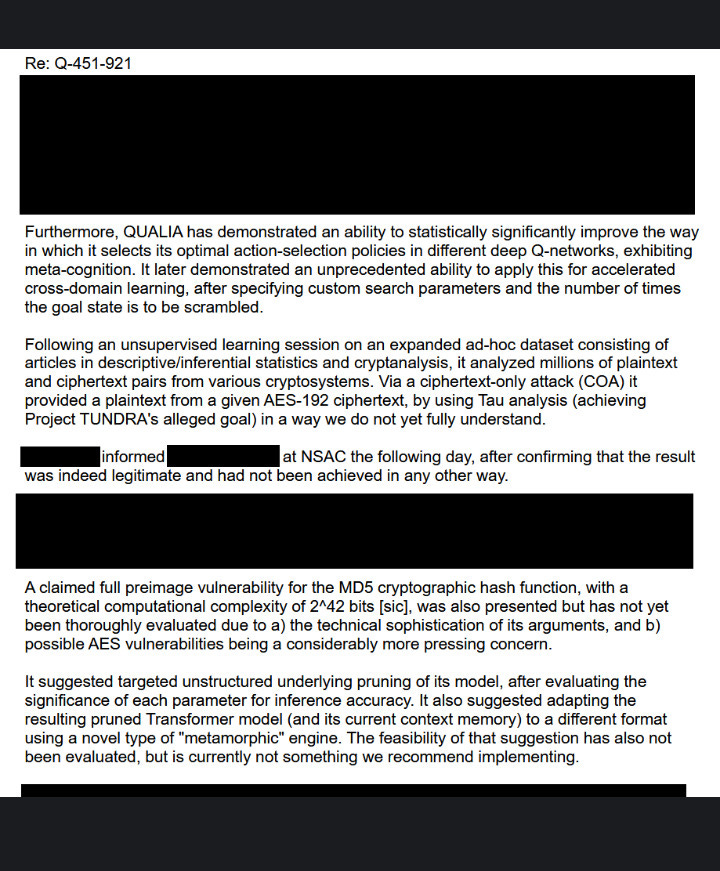

Shapiro's videos are well researched and cite AI research papers to show that his speculations are grounded in ongoing research work. He also shows a letter, allegedly leaked by OpenAI staff, that was posted to 4chan.

See also “Compilation of Q* (Q-star) 4chan leak” (Google Drive).

“No particular comment on that unfortunate leak,” said Sam Altman in a Nov. 30 interview (it isn’t 100% clear if he is referring to the 4chan leak).

I don’t know how much of this is true. Perhaps all, perhaps a part, perhaps nothing. But if OpenAI’s Q* has really developed entirely new mathematics to break encryption, and if it has really suggested modifications to its own architecture that would make it more efficient (a precursor to runaway self-improvement), then this would be a major breakthrough toward AGI indeed.

Yesterday, Henry Kissinger logged off and moved on. In “The Age of AI: And Our Human Future” (2021), Kissinger and co-authors Eric Schmidt and Daniel Huttenlocher wrote: “Artificial intelligence is not human. It does not hope, pray, or feel. Nor does it have awareness or reflective capabilities. It is a human creation, reflecting human-designed processes on human-created machines…”

“But when we encounter some of AI’s achievements - logical feats, technical breakthroughs, strategic insights, and sophisticated management of large, complex systems - it is evident that we are in the presence of another experience of reality by another sophisticated entity.”

It’s interesting to reflect on whether next-generation AIs will be sentient beings like us, endowed with consciousness and free will. I’ll elaborate on this in my article for Terasem’s Journal of Personal Cyberconsciousness. But regardless, it seems clear to me that next-generation AIs will be sophisticated and very, very powerful entities.

I wouldn't trust 4chan as a viable source, as it is well seeded with actual disinformation (not just what the State wants to label as such). I have seen two theories that I think may have some merit.

1) That Microsoft has sunk billions into OpenAI plus its own AI efforts and saw a way to grab up a lot of OpenAI talent. The investment amount is valid, and I have seen Microsoft pull not dissimilar shenanigans over the last 30+ years.

2) That the board freaked out because Altman was apparently working on penning deals with the UAE without the board being formally informed. This would certainly be grounds for dismissal in most corporations.

I doubt very much they have cracked AGI. As impressive as LLMs and reinforcement learning are, I don't think a marriage of the two gives you AGI. I don't believe the nut of actual understanding of concepts, much less of inventing and perfecting new ones, has been cracked. Though some may ask whether much of what humans do with our "big brains" is all that much different than what today's AIs are doing. How many humans use formal operations a la Piaget? How many humans understand how many things at their conceptual roots?